What is DreamOmni2?

DreamOmni2 is an open-source artificial intelligence model designed to handle two complex tasks: multimodal instruction-based image editing and generation. This means the model can understand instructions given through both text and images, allowing users to edit existing images or create entirely new ones based on specific guidance. The model goes beyond traditional approaches by supporting both concrete objects (like people, animals, and physical items) and abstract concepts (such as textures, artistic styles, materials, makeup looks, and design patterns).

Released in October 2025 by researchers from leading institutions, DreamOmni2 represents a significant step forward in making AI image generation and editing more intuitive and accessible. The model was developed to address common limitations found in existing tools, where users often struggled to communicate their exact intentions using text alone. By accepting reference images alongside text instructions, DreamOmni2 bridges the gap between what users envision and what they can express in words.

The model is built on a unified framework, meaning it can handle both editing and generation tasks without requiring separate tools or workflows. This integration makes DreamOmni2 particularly valuable for creative professionals, researchers, and hobbyists who need flexible, powerful tools for visual content creation. The project is released under the Apache 2.0 license, making it freely available for research and commercial use in most regions.

Overview of DreamOmni2

| Feature | Description |

|---|---|

| AI Model | DreamOmni2 |

| Category | Multimodal Image Editing and Generation |

| Primary Functions | Instruction-based editing, Subject-driven generation, Style transfer |

| Input Types | Text instructions and reference images |

| Research Paper | arxiv.org/abs/2510.06679 |

| Code Repository | github.com/dvlab-research/DreamOmni2 |

| License | Apache 2.0 |

| Release Date | October 2025 |

Understanding the Two Core Tasks

Multimodal Instruction-based Generation

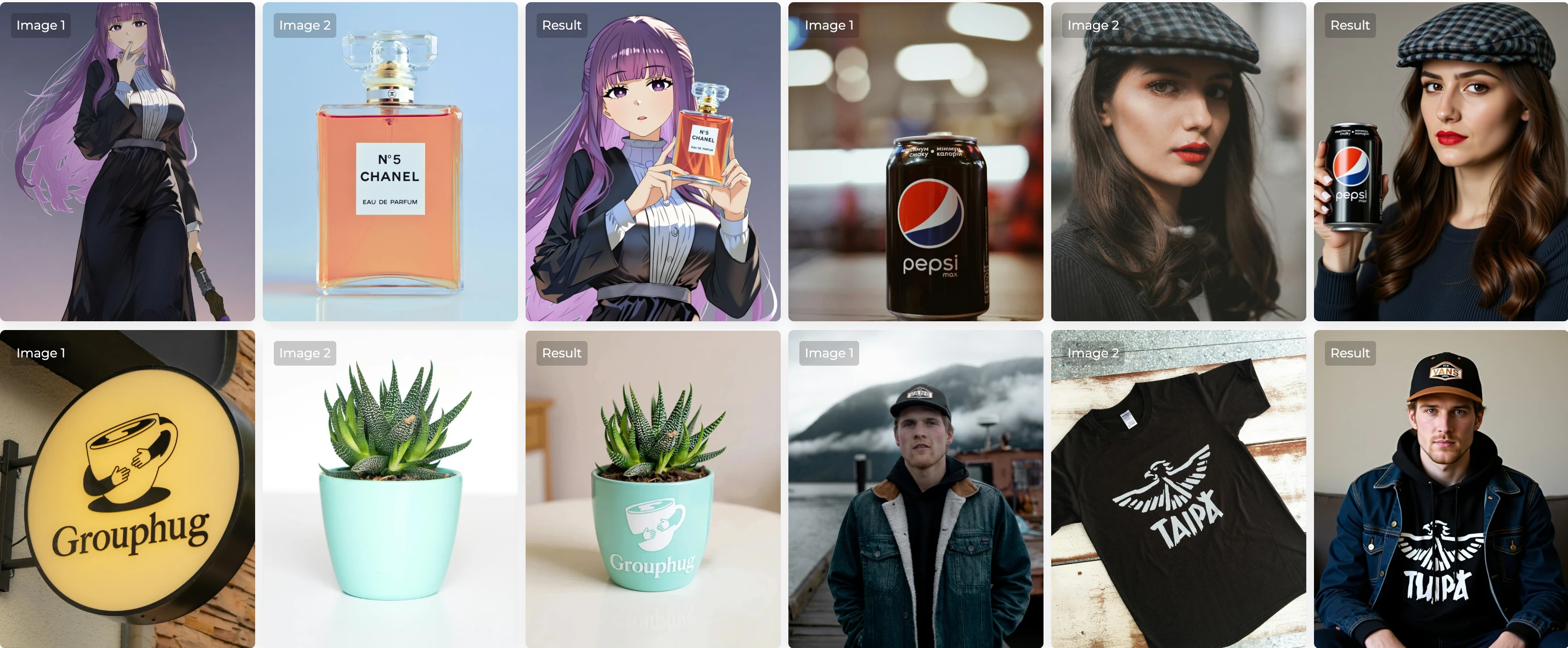

The generation task focuses on creating new images from scratch based on user instructions. Traditional subject-driven generation systems could only work with concrete objects, such as placing a specific person or product into a new scene. DreamOmni2 expands this capability significantly by supporting abstract attributes as well.

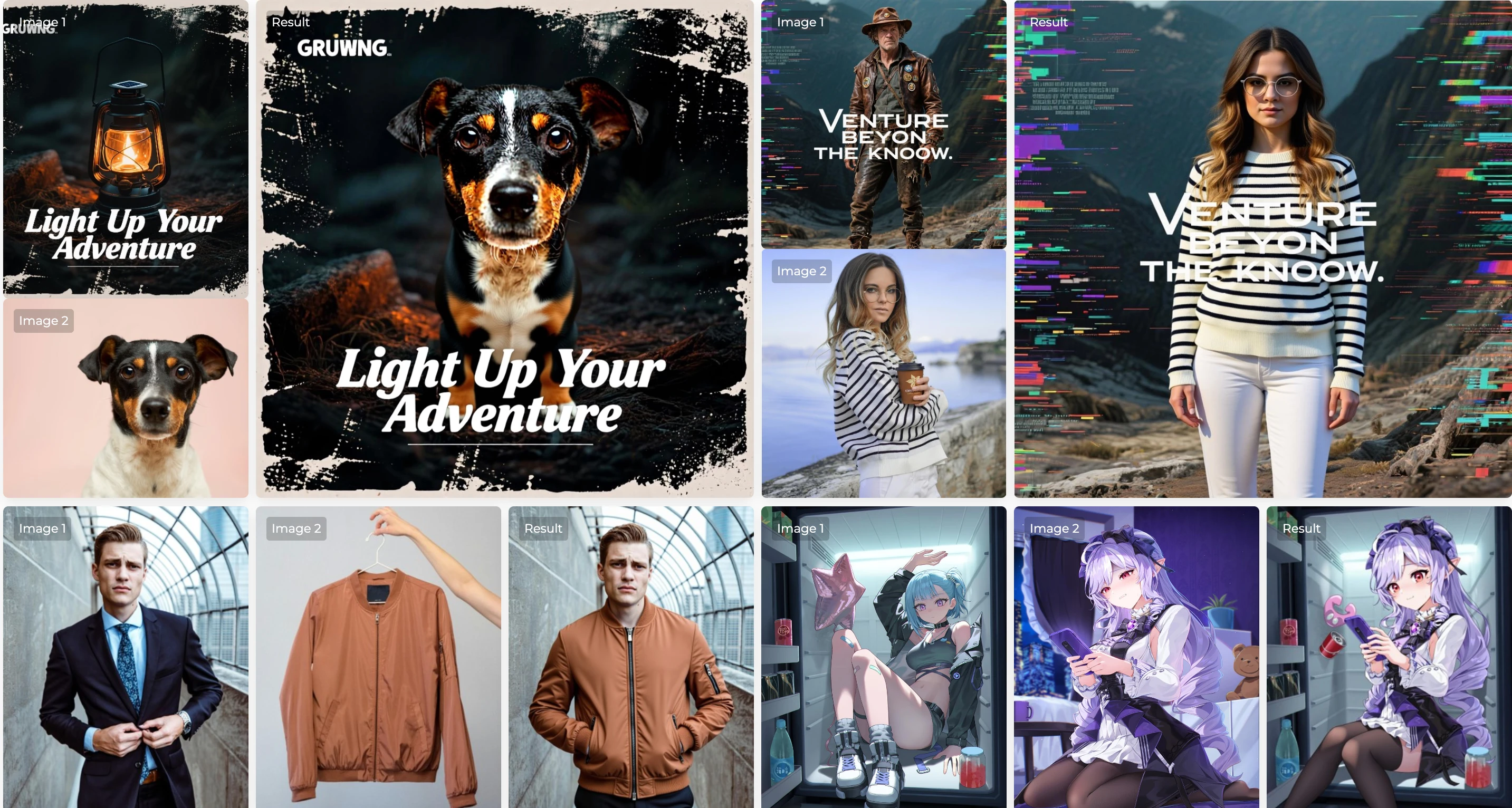

For concrete objects, the model excels at maintaining identity and pose consistency. If you provide a reference image of a person and ask the model to place them in a different setting, DreamOmni2 will preserve their facial features, body proportions, and general appearance while adapting them to the new context. This capability is particularly useful for applications like virtual try-ons, character design, and product visualization.

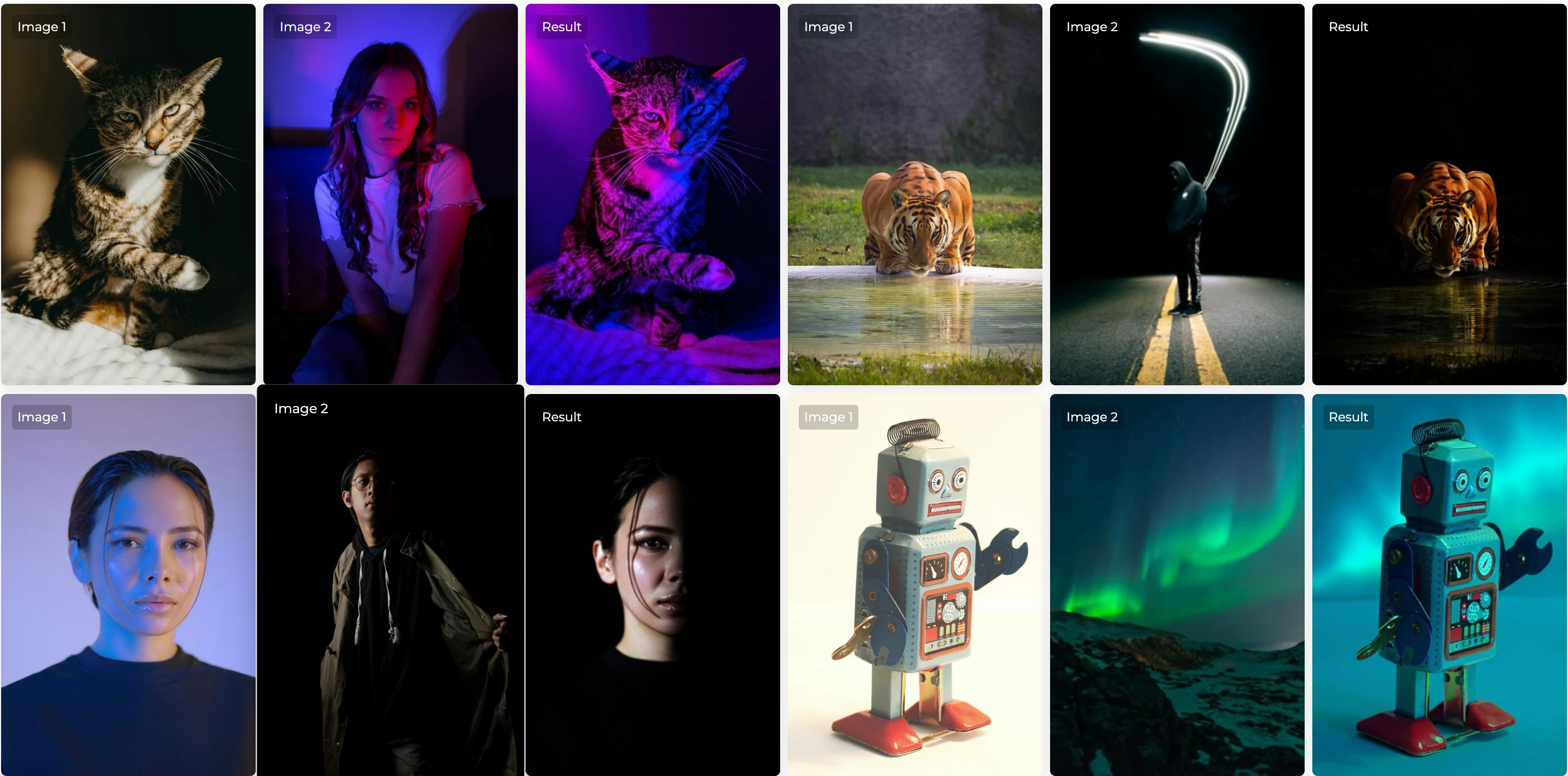

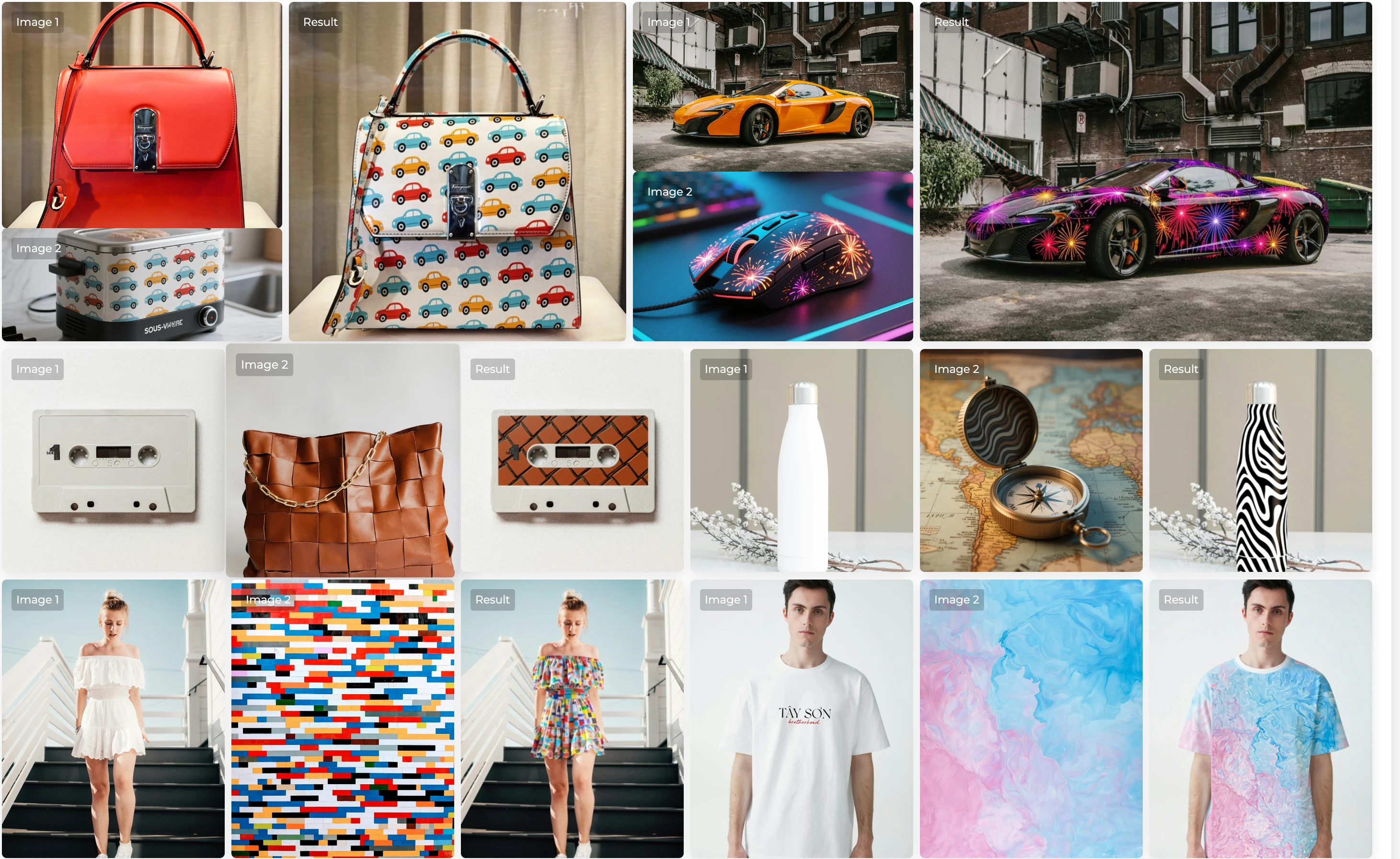

The real innovation comes with abstract attributes. Users can now reference elements like material textures (such as velvet, marble, or wood grain), artistic styles (impressionism, watercolor, or sketch), makeup looks, hairstyles, design patterns, and even lighting conditions. For example, you could show the model an image of a fabric texture and ask it to apply that texture to a piece of furniture, or reference a painting style and request that style be applied to a portrait. This flexibility opens up countless creative applications that were previously difficult or impossible to achieve with text-only instructions.

Multimodal Instruction-based Editing

Image editing presents different challenges than generation. When editing an existing image, the model must preserve all areas that should remain unchanged while making precise modifications to specific regions. Traditional instruction-based editing relied purely on text descriptions, which often proved inadequate for conveying specific visual details.

DreamOmni2 addresses this limitation by accepting reference images alongside text instructions. This multimodal approach allows for much more precise editing. For instance, if you want to change someone's outfit in a photo, you can provide a reference image showing the exact clothing item you want, rather than trying to describe it in words. The model will then replace the outfit while maintaining the original pose, lighting, and background.

The editing capabilities extend to both concrete and abstract concepts. You can replace objects, change materials, modify styles, adjust lighting, alter facial expressions, transform hairstyles, and apply various artistic effects. The model handles these edits while maintaining strict consistency in non-edited areas, which is crucial for professional applications where precision matters.

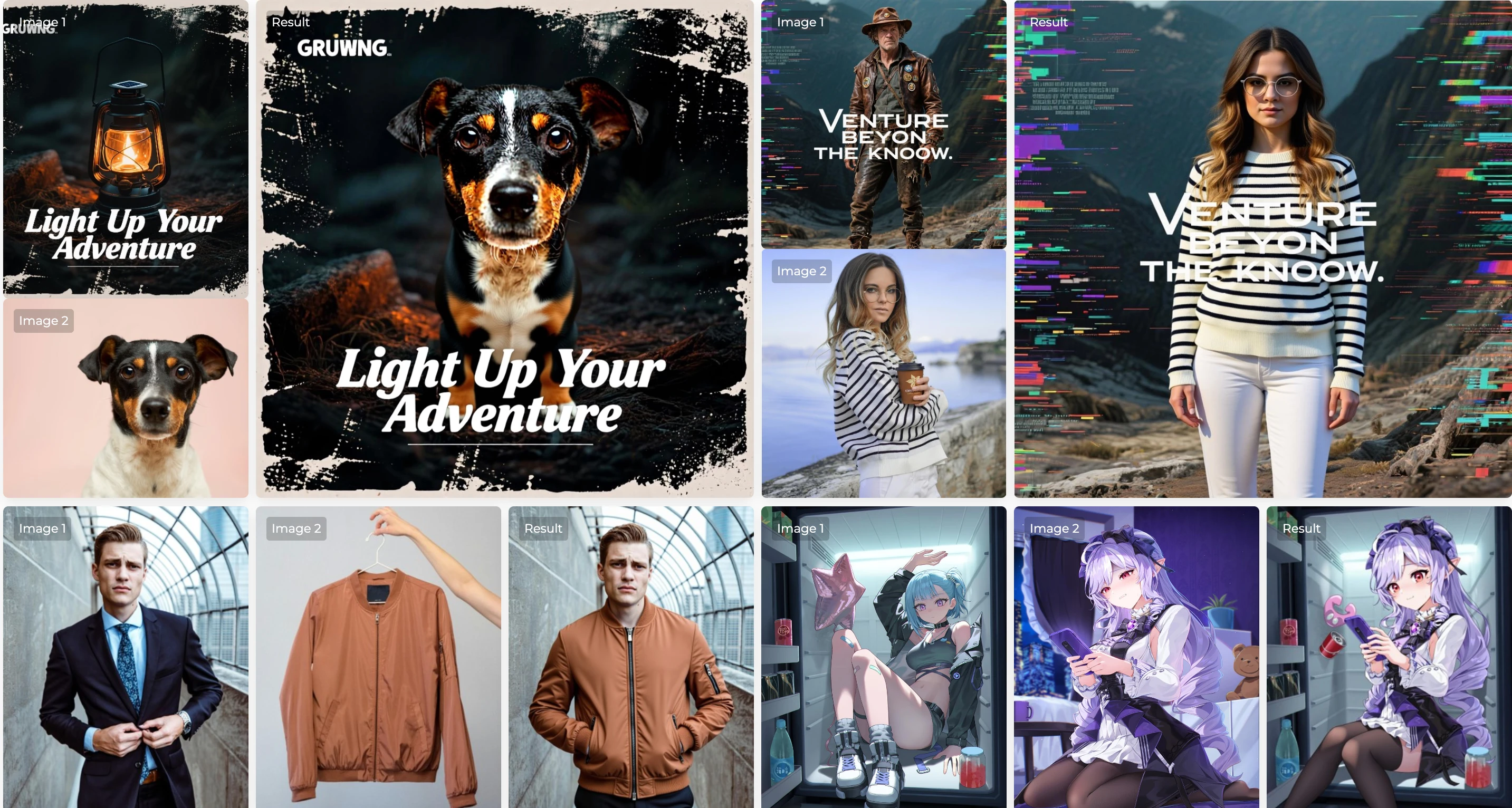

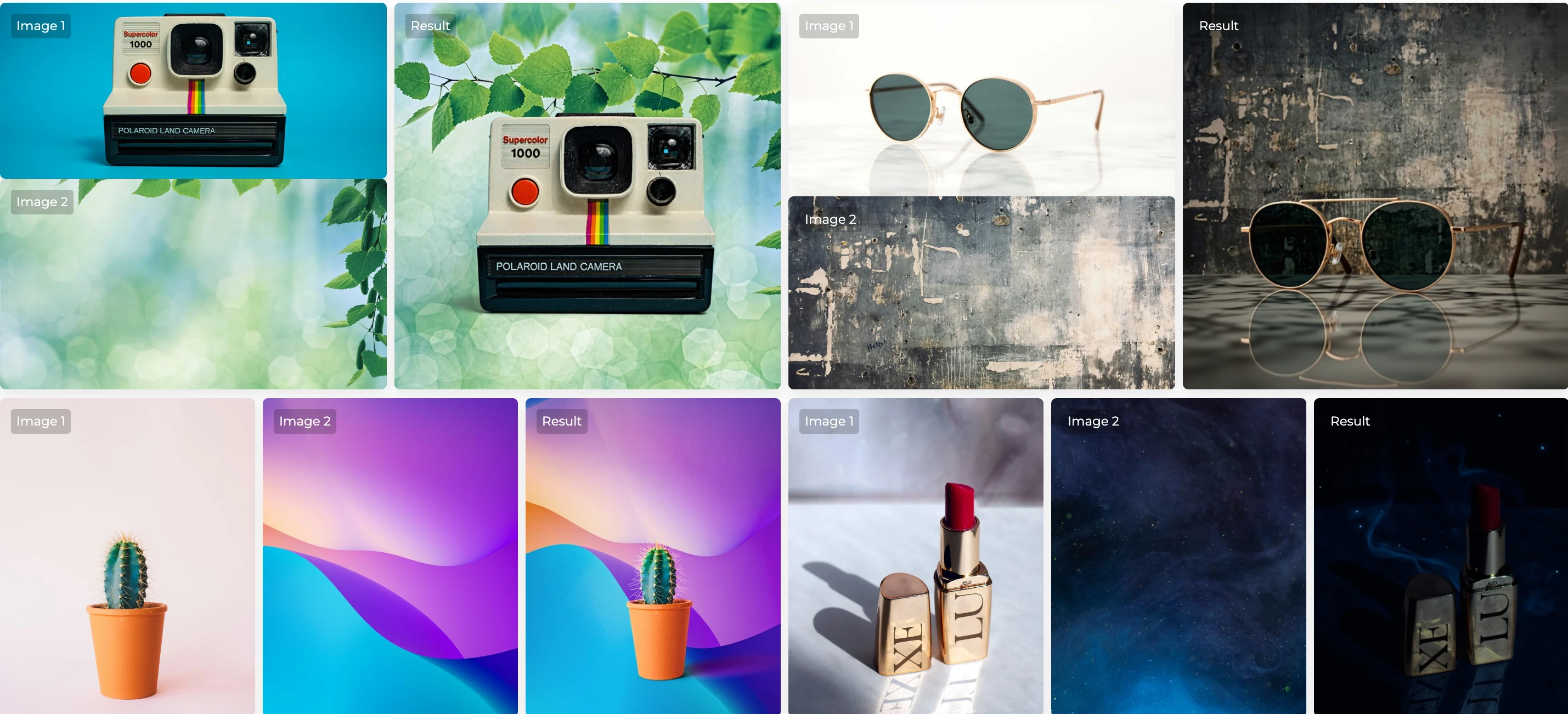

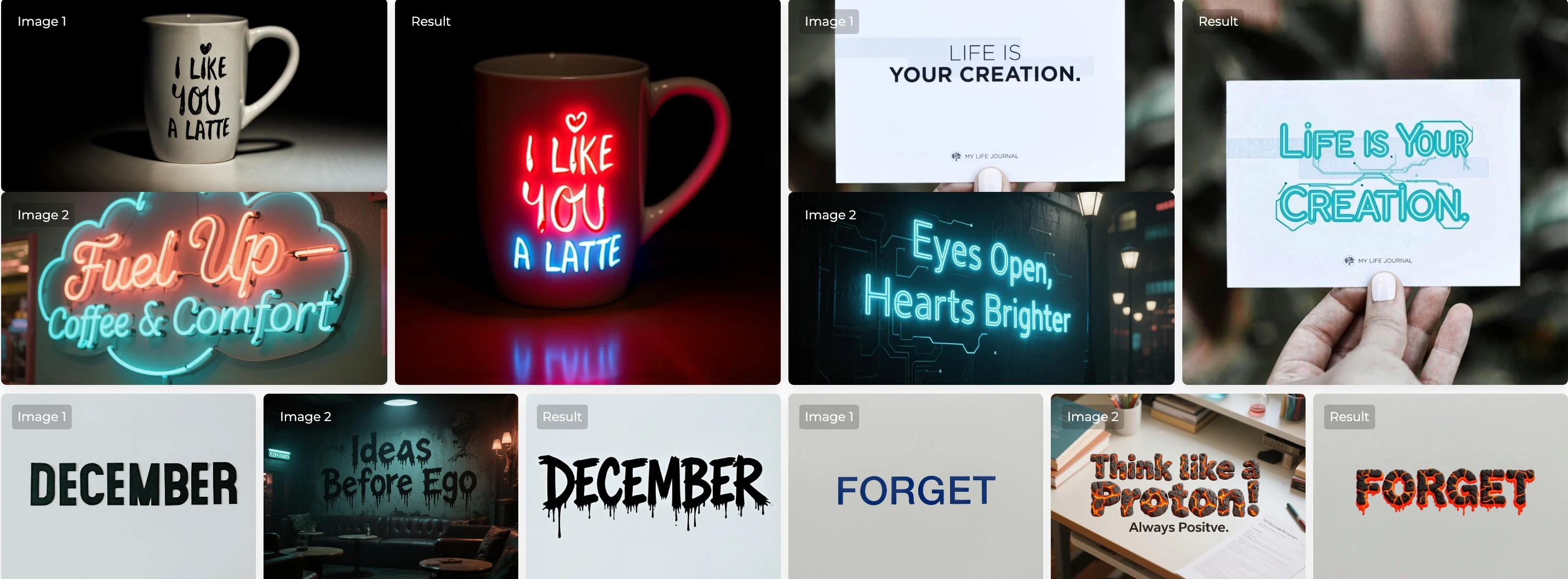

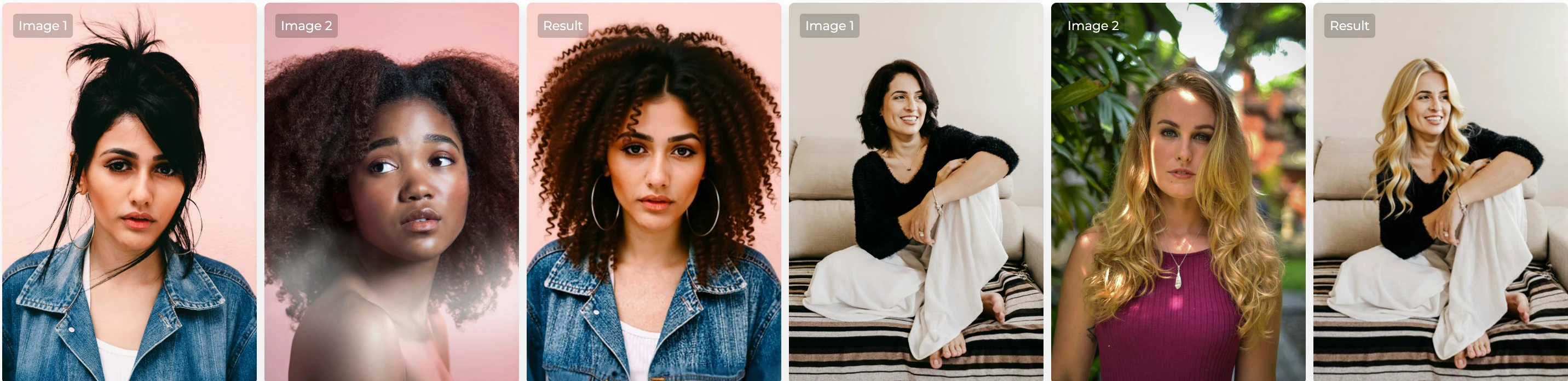

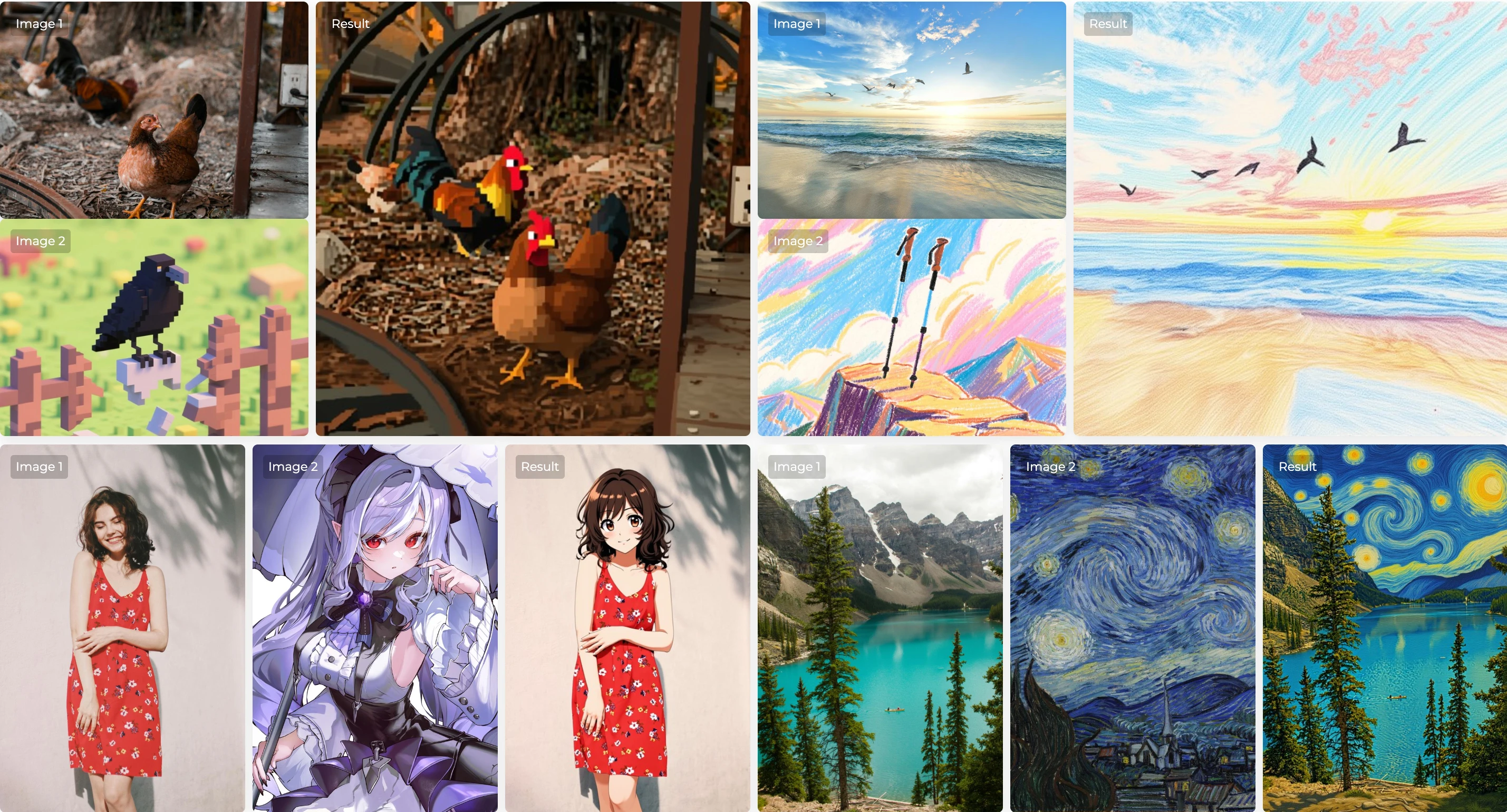

DreamOmni2 Visual Demo

Background Replace

Background Replace Face Expression Editing

Face Expression Editing Font Replacement

Font Replacement Hair Style Editing

Hair Style Editing In-Context Generation

In-Context Generation Lighting Rendering

Lighting Rendering Object Replacement

Object Replacement Pattern Imitation

Pattern Imitation Pose Imitation

Pose Imitation Style Transfer

Style TransferKey Features of DreamOmni2

Unified Model Architecture

DreamOmni2 combines editing and generation capabilities into a single framework. This unified approach simplifies workflows and allows users to switch between tasks without changing tools or retraining models. The architecture is built to handle complex instructions efficiently while maintaining high-quality output across different use cases.

Multimodal Instruction Processing

The model accepts both text and image inputs simultaneously. This multimodal capability allows users to provide detailed guidance that would be difficult to express through text alone. The system includes an index encoding and position encoding shift scheme that helps distinguish between multiple input images and prevents pixel confusion during processing.

Abstract Concept Support

DreamOmni2 can work with abstract attributes like textures, materials, styles, and patterns. This capability goes beyond what most current models offer, enabling use cases such as material transfer, style application, and artistic effect reproduction. The model can extract these abstract concepts from reference images and apply them to new contexts intelligently.

High Identity Consistency

When working with concrete objects, particularly people and characters, the model maintains strong identity consistency. Facial features, body proportions, and distinctive characteristics are preserved across different poses and settings. This makes the model particularly valuable for character animation, virtual photography, and personalized content creation.

Precise Editing Control

The editing capabilities maintain strict boundaries between modified and unmodified regions. Non-edited areas remain pixel-perfect identical to the source image, which is essential for professional work. The model understands spatial relationships and can make localized changes without affecting the overall composition.

Joint Training with Vision Language Model

DreamOmni2 incorporates joint training with a Vision Language Model to better process complex instructions. This integration helps the model understand nuanced requests and handle sophisticated editing tasks that require deep comprehension of both visual and textual information.

Multi-Image Input Support

The model can accept multiple reference images in a single instruction. This capability allows for complex operations like combining elements from different sources, applying multiple style references simultaneously, or using several examples to guide the output. The system handles up to four reference images effectively.

Open Source Availability

Released under the Apache 2.0 license, DreamOmni2 is freely available for research and commercial applications. The complete codebase, model weights, and training data pipeline are publicly accessible, allowing developers to integrate the technology into their projects or build upon it for new applications.

Technical Approach

The development of DreamOmni2 involved addressing two primary challenges: creating appropriate training data and designing a model framework capable of handling multimodal inputs effectively.

Data Synthesis Pipeline

The research team developed a three-step data synthesis pipeline to generate training examples. First, they used a feature mixing method to create extraction data for both abstract and concrete concepts. This process involved carefully separating visual attributes from source images to build a comprehensive dataset of extractable features.

Second, they generated multimodal instruction-based editing training data using the editing and extraction models. This step created examples showing how images should be modified based on various combinations of text and image instructions. The dataset includes diverse scenarios covering object replacement, style transfer, material changes, and other editing operations.

Third, they applied the extraction model to create additional training data for multimodal instruction-based generation. This process ensured the model could learn to create new images from scratch while following complex multimodal guidance.

Model Framework Design

To handle multiple image inputs effectively, the team implemented an index encoding and position encoding shift scheme. This mechanism helps the model distinguish between different input images and prevents confusion when processing multiple visual references simultaneously. Each image receives unique positional information that guides the model in understanding which elements to extract from which source.

The framework also includes joint training with a Vision Language Model. This integration improves the model's ability to parse complex instructions that involve both visual and textual information. The VLM component helps translate user intent into actionable processing steps, making the system more responsive to natural language requests.

Applications and Use Cases

Object Replacement

Replace people, products, or objects in images while maintaining natural lighting and composition. Useful for e-commerce, advertising, and content creation.

Style Transfer

Apply artistic styles from reference images to new content. Transform photographs into paintings, apply illustration styles to designs, or match the aesthetic of existing artwork.

Material and Texture Application

Change the materials of objects in images. Apply fabric textures to clothing, modify surface finishes on products, or transform architectural elements with different materials.

Character Creation and Animation

Generate consistent character appearances across different poses and settings. Useful for animation, game design, virtual avatars, and storytelling.

Virtual Try-On

Show products on different people or in various contexts. Apply makeup, change hairstyles, or demonstrate clothing items on different body types.

Design Iteration

Rapidly prototype design variations by changing colors, patterns, materials, and styles. Accelerate the creative process for product design, interior design, and fashion.

Photo Editing and Enhancement

Make targeted edits to photographs based on reference examples. Adjust lighting conditions, modify backgrounds, change expressions, or apply specific visual effects.

Understanding Editing vs Generation

DreamOmni2 treats editing and generation as distinct tasks, each with specific requirements and outputs. Understanding this distinction helps users choose the appropriate mode for their needs.

Editing mode requires strict consistency in preserving non-edited areas of the source image. When you edit an image, everything except the specifically modified regions should remain exactly as it was. This precision is crucial for professional workflows where maintaining the original context and composition matters. The editing process focuses on making targeted changes while respecting the integrity of the source material.

Generation mode, in contrast, only needs to retain identity, intellectual property, or specific attributes from the reference images as specified in the instructions. The entire output image can be regenerated from scratch, with emphasis placed on creating an aesthetically pleasing result that incorporates the referenced elements naturally. Generation mode offers more creative freedom since it is not constrained by preserving specific pixels from a source image.

The research team separated these tasks because they found that instructions for editing and generation are often similar in form but different in intent. By explicitly distinguishing between the two modes, DreamOmni2 makes it easier for users to communicate their exact needs and get appropriate results. This separation also allows the model to optimize its processing for each specific task type.

Advantages and Limitations

Advantages

- Supports both concrete and abstract concept references

- Unified model handles multiple tasks

- Strong identity and pose consistency

- Precise editing with preserved non-edited areas

- Accepts multiple reference images

- Open source and freely available

- Comparable to commercial models in many tasks

- Flexible multimodal instruction system

Limitations

- Requires proper hardware for local deployment

- Processing time depends on output resolution

- Quality varies with instruction complexity

- Limited to single output per instruction

- May require experimentation to achieve desired results